Why your business should care about Cloudflare

Here are five business realities that make edge-native architecture essential for modern enterprises:

1. Revenue impact: Speed equals money

Sub-100ms response times globally translate directly to conversion rates. Amazon found that every 100ms of latency costs 1% of sales. Edge-native architecture delivers consistent performance across all markets without complex multi-region deployments.

2. Operational efficiency: Do more with less

Edge-native eliminates infrastructure overhead that doesn't serve customers. Instead of managing server capacity and scaling policies, your team focuses on features that drive business value, with engineering time redirected to innovation.

3. Global market without infrastructure investment

Traditional cloud requires expensive multi-region setups for global performance. Edge-native means your application performs consistently worldwide from day one. When you're ready for new markets, your infrastructure already is.

4. Risk mitigation: Built-in resilience

DDoS attacks cost enterprises an average of £2M per incident. Edge-native architecture includes enterprise-grade security by default, so your application stays online when competitors go dark.

5. Future-proof AI strategy

AI processing at the edge means real-time personalisation and privacy-compliant intelligence without latency penalties. Edge-native applications deliver AI-powered experiences that customers actually notice and value.

Every 100ms in added page load time costs 1% in revenue

Amazon research
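To make that rule of thumb tangible, here is a back-of-the-envelope sketch of the arithmetic. It assumes the quoted 1% per 100ms relationship scales linearly, which real traffic will not do exactly. The function name and the revenue figure are hypothetical and purely illustrative.

```typescript
// Rule of thumb only: assumes "1% of sales per 100ms" scales linearly.
const LOSS_PERCENT_PER_100MS = 1;

// Hypothetical helper: estimated revenue lost for a given amount of added latency.
function estimateRevenueLoss(annualRevenue: number, addedLatencyMs: number): number {
  // Cap at 100% so very large latencies cannot "lose" more than the total.
  const lossPercent = Math.min(100, (addedLatencyMs / 100) * LOSS_PERCENT_PER_100MS);
  return (annualRevenue * lossPercent) / 100;
}

// Illustrative input: 10M in annual online revenue and 300ms of extra latency.
console.log(estimateRevenueLoss(10_000_000, 300)); // logs 300000
```

In other words, under this simplified assumption, 300ms of avoidable latency on 10M of revenue corresponds to roughly 300K at stake.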

Still intrigued and keen on the technical nitty-gritty? Feel free to read along. But before we dive into the details, a word or two on the shift from cloud-first to edge-native.

After delivering multiple enterprise solutions on AWS, Azure and dedicated runtimes like Vercel, from BOSS Paints (Vercel) to Degroof-Petercam (Azure) and Century 21 (AWS), we witnessed something fundamental two years ago: traditional cloud architectures, no matter how well engineered, introduce latency that affects your conversion. Here's why.

So edge-native applications, you say?

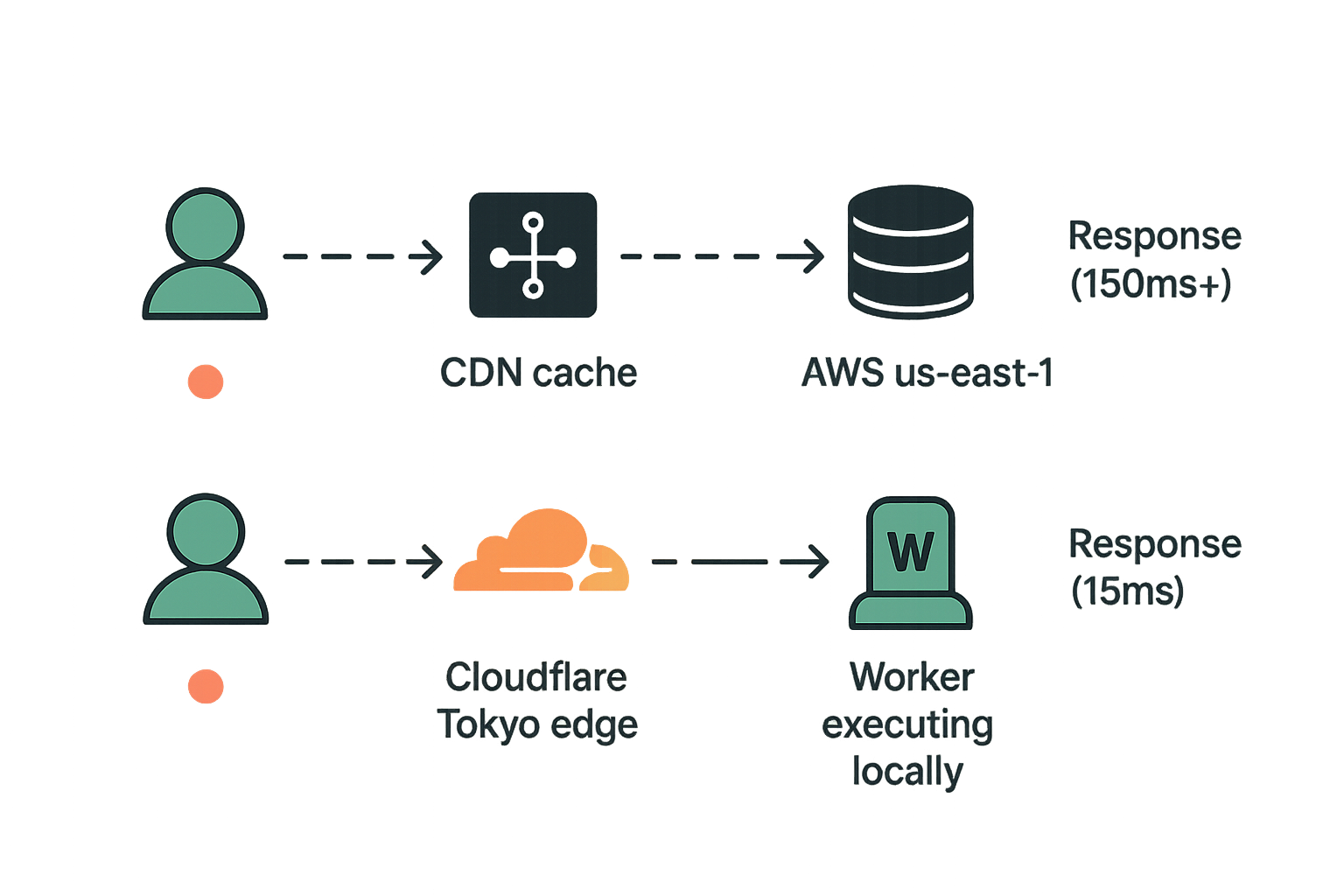

Edge-native applications are designed from the ground up to run at the network edge, not retrofitted from cloud-first designs. Instead of this:

Application logic and data processing thus happen close to users, on the Tokyo edge in the Cloudflare example, rather than only static content being cached there, as in the AWS example. That is a speed advantage that can't be ignored.

Why AWS and Azure still matter (but are no longer our default go-to)

We still adore AWS and Azure as they're phenomenal for complex, data-intensive applications with unmatched service breadth. But for the headless, composable, content-driven applications that define modern digital experiences, hyperscaler complexity can become overhead rather than advantage.

When your architecture is composable, built from best-of-breed APIs rather than monolithic services, you need infrastructure that's equally distributed and optimised for speed.

And what about Vercel?

Everybody is talking about Vercel. Yes, we know, and we definitely understand why: it's a very neat platform with strong performance, specifically when you're using Next.js. Just look at our work for be•at venues (Sportpaleis), which easily handles thousands of fans when they proceed to order tickets for Pommelien Thijs.

However, for starters, Next.js is not the only framework on the market. Yes, Vercel is widening its view, with Nuxt for instance, but it is still very much focused on its own ecosystem, which obviously has its benefits as well.

But whilst we appreciate Vercel's developer experience, it's ultimately a layer of sugar on top of AWS (amongst others), with beautiful constraints that lock you somewhat into Vercel's interpretation of serverless and leave you with less control over infrastructure. Vercel relies on AWS as its primary cloud provider for its global edge network and, guess what, on Cloudflare for CDN and DNS services.

For enterprise clients needing custom routing or additional compliance requirements, this abstraction becomes limiting. Cloudflare gives you a comparable developer experience with additional infrastructure flexibility.

5 Reasons we choose Cloudflare for modern applications

Here are our top 5 reasons why we like Cloudflare a lot. True bromance, if you like.

1. True Global Distribution - Your application runs at 300+ edge locations automatically. For X2O's expansion, this meant consistently fast response times from Amsterdam to Paris without multi-region complexity.

2. AI at the Edge - Whilst others build AI in centralised data centres, Cloudflare runs inference at the edge, enabling AI-powered personalisation that happens milliseconds away from users, not hundreds of milliseconds away.

3. Cost Predictability - Pay for requests, not idle infrastructure. All of our clients saw a clear cost reduction compared to traditional cloud deployments and previous providers.

4. Security Built-In - Enterprise-grade DDoS protection, WAF, and bot management by default. No separate CloudFront, WAF, and Shield configurations, or the Azure equivalents such as Front Door and WAF.

5. Composable Architecture Sweet Spot - Unlike traditional cloud services that require complex orchestration, Cloudflare's components work together seamlessly. Everything is designed for modern, distributed applications that need to scale globally without infrastructure complexity.

Cloudflare's services align perfectly with composable thinking:

Workers (serverless functions at the edge) handle your application logic.

KV (key-value store) manages distributed configuration and session data across all edge locations.

R2 (Cloudflare's S3-compatible object storage) stores assets and media files at a fraction of AWS costs.

D1 (SQLite databases at the edge) enables fast data queries without round-trips to central databases.
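To give a feel for how two of these building blocks fit together, here is a minimal sketch of a Worker-style handler that reads configuration from KV. It is an illustration under stated assumptions, not a production setup: the binding name `SITE_CONFIG`, the `banner:` key scheme, the trimmed-down `KvLike` interface and the in-memory `memoryKv` stand-in are all hypothetical. Only the `get` call mirrors how a KV binding is read.

```typescript
// Minimal subset of a Workers KV binding as used here: get resolves to null on a miss.
interface KvLike {
  get(key: string): Promise<string | null>;
}

// Hypothetical binding name; real bindings are declared in the Worker's configuration.
interface Env {
  SITE_CONFIG: KvLike;
}

// In a deployed Worker, this object would be the module's default export.
const worker = {
  async fetch(request: Request, env: Env): Promise<Response> {
    const { pathname } = new URL(request.url);
    // Look up a per-path banner message in KV, falling back to a default.
    const banner = (await env.SITE_CONFIG.get(`banner:${pathname}`)) ?? "Welcome";
    return new Response(JSON.stringify({ pathname, banner }), {
      headers: { "content-type": "application/json" },
    });
  },
};

// In-memory stand-in so the sketch can run outside Cloudflare.
function memoryKv(entries: Record<string, string>): KvLike {
  const store = new Map(Object.entries(entries));
  return { get: async (key) => store.get(key) ?? null };
}

// Local usage example with a fake KV namespace.
worker
  .fetch(new Request("https://example.com/nl"), {
    SITE_CONFIG: memoryKv({ "banner:/nl": "Welkom" }),
  })
  .then((res) => res.text())
  .then((body) => console.log(body));
```

The point of the sketch is the shape: the logic and the configuration lookup live in the same handler, so there is no separate application server to call first.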

And what about AI?

AI is moving to the edge, and Cloudflare is well-positioned with Workers AI, enabling you to extend edge-native applications with AI processing power distributed globally. Whilst AWS Bedrock and Azure's AI services typically require round-trips to centralised regions, Cloudflare Workers AI runs machine learning models across Cloudflare's global network, close to your users:

Real-time personalisation without latency - AI decisions happen locally, not after database queries

AI-powered A/B testing that adapts in milliseconds - Content optimisation based on user behaviour in real-time

Content optimisation at delivery time - Dynamic image processing, text generation, and content adaptation

Privacy-compliant processing that never leaves the user's region - AI inference happens at the edge closest to your users

The breakthrough: your edge-native applications can now include AI features (recommendation engines, content generation, dynamic personalisation) without sacrificing the speed advantage that makes edge architecture compelling in the first place.
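As a rough sketch of what such a feature could look like in code, the example below asks a text model for a personalised headline through an AI binding. Treat the details as assumptions to verify against the current Workers AI documentation: the model identifier, the simplified `AiLike` interface and the `{ response }` result shape are illustrative, and `personalisedHeadline` and `stubAi` are hypothetical names. The stub lets the sketch run locally without calling any model.

```typescript
// Simplified view of an AI binding: run(model, inputs) resolves to a model-specific result.
interface AiLike {
  run(model: string, inputs: { prompt: string }): Promise<{ response?: string }>;
}

// Assumed model identifier; check the Workers AI catalogue for what is available.
const MODEL = "@cf/meta/llama-3.1-8b-instruct";

// Hypothetical helper: ask the model for a short, personalised headline.
async function personalisedHeadline(ai: AiLike, interest: string): Promise<string> {
  const result = await ai.run(MODEL, {
    prompt: `Write a one-line homepage headline for a visitor interested in ${interest}.`,
  });
  // Fall back to a static headline if the model returns nothing usable.
  return result.response?.trim() || "Welcome back";
}

// Stub binding so the sketch runs locally; it never calls a real model.
const stubAi: AiLike = {
  run: async () => ({ response: "  Front-row seats are still available tonight  " }),
};

personalisedHeadline(stubAi, "concert tickets").then((headline) => console.log(headline));
```

Inside a Worker, the same helper would receive the real binding from the environment instead of the stub, and the static fallback keeps the page usable if inference fails to return text.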

When we still choose hyperscalers

Cloudflare isn't always the right fit. We often still recommend AWS/Azure (or others) for complex data pipelines, heavily regulated industries (extended compliance), massive compute requirements, legacy system integration or if they are your strategic platform of choice.

The insight

Modern architectures often combine both: edge-native front-ends on Cloudflare with data processing on hyperscalers like AWS, GCP or Azure.

The edge-native advantage: beyond technology, it's strategy

The shift to edge-native isn't just an infrastructure decision, it's strategic positioning for the next decade of digital business. Whilst competitors wrestle with traditional cloud complexity, edge-native applications deliver the instant, AI-powered experiences customers now expect as standard.

We've witnessed this transformation firsthand: enterprises embracing edge-native architecture don't just improve technical metrics, they fundamentally change how quickly they enter new markets, adapt to customer behaviour, and deliver personalised experiences at scale.

The question isn't whether edge-native will become standard, it's whether your business will lead the transition or follow it.

Cloudflare provides the foundation: application logic running globally, AI processing at the edge, costs that scale with success, and security that protects growth. For composable, headless, and AI-driven applications, this isn't just better infrastructure, it's competitive advantage built into your architecture.

Want to explore what Cloudflare can mean for your business?

Get in touch